On my way to EuroSTAR 2012, I started thinking about the Cynefin model and about landscape diagrams, which I know from teaching some courses. I tried to relate them to software testing and its different techniques, without being sure where this would lead me.

I discussed an early draft with Michael Bolton, Bart Knaack and Huib Schoots, and I want to share what I ended up with. So, here it is.

Initially I wanted to label the x-axis with skills, or rather required skills, and the y-axis with domain knowledge. When I talked to Michael Bolton, he convinced me that I was probably looking for something else, and that the problem I was trying to solve with this tool should determine how to label the axes.

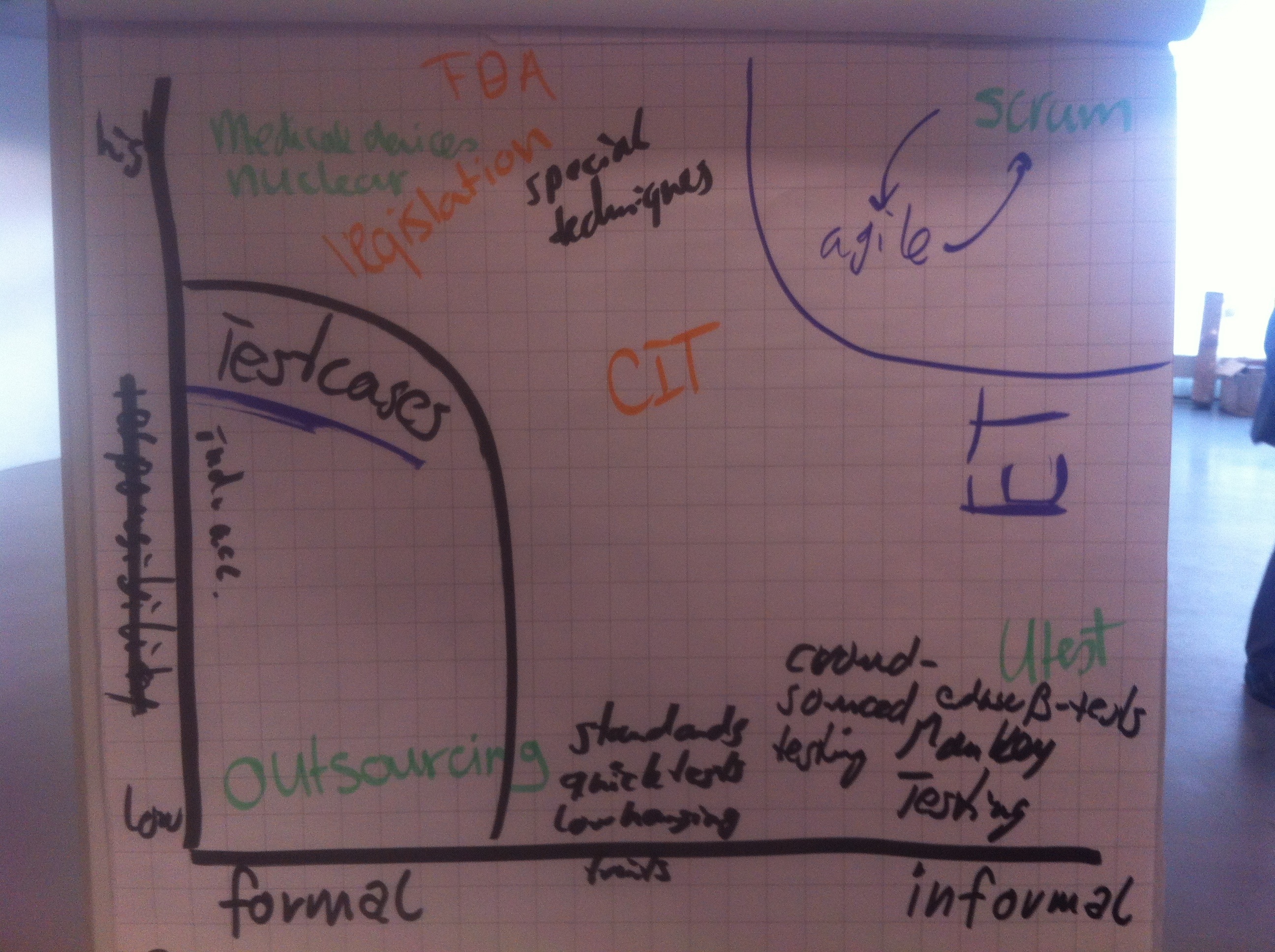

We settled on formality for the x-axis, with very formal methods on the left and informal ones on the right. The y-axis shows the amount of individual accountability for the testing being done.

The problem I am trying to solve with this tool is to make it transparent when test cases and other formal, traditional test approaches are useful, and when more lightweight or informal approaches are useful instead, such as those we use in Agile testing or Exploratory testing. The landscape diagram then hopefully becomes a tool for having a conversation about the degree of formality needed in a given context. (We haven’t applied it at a client yet; so far we have only bounced some ideas back and forth.)

Later, Bart Knaack, Huib Schoots and I extended the idea in a discussion at a flip chart. We started with the basic model I had discussed with Michael Bolton that morning, and Bart and Huib provided some great input. We ended up with the following flip chart, which we hoped to extend over the course of the conference.

As you can see, we moved away from the idea of putting test cases into context and more toward asking which bubbles apply in a given situation: for example, where testing is informal and the individual tester has low accountability, or where the individual tester has high accountability and more informal testing needs to be done.

We started by placing different companies and types of projects. Agile and Scrum ended up in the top right quadrant of the landscape, while more regulated environments ended up in the top left corner. Factory testing ended up closer to the lower left corner.

I don’t think the discussion about the landscape is over yet. Rather, I hope it will trigger some discussions about putting testing more into context, so that we as a profession can get past the “it depends” trap that I think we currently fall into too often.

Very interesting, a model I can relate to :-)

Consider, though: is outsourcing always low “personal accountability”? IT services and outsourcing are a way of delegating cost, tasks and responsibility (been there, done that). If Google uses contractors (not their own staff) to test Google Maps (+ in the wild), that is a form of outsourcing. Is outsourcing always formal? Consider the tissue testers from the EuroSTAR 2010 game industry keynote. I know subject matter experts with high personal accountability who just happen to be outsourced. And if a medical device company outsources its FDA testing to someone else, what is it then?

Context matters and models fail *lol*. Keep tossing the idea around, it’s good! /Jesper